
Backpropagation

3Blue1Brown, Part 1

What is backpropagation really doing? | Chapter 3, Deep learning

- Good first video - not very technical

- Good explanation of stochastic gradient descent versus gradient descent.
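As a rough illustration of that distinction (not taken from the video), the sketch below fits a one-parameter model `y = w * x` two ways. Gradient descent computes the gradient over every data point on each step, while stochastic gradient descent estimates it from a small random batch. The data, the function names `mse_gradient` and `train`, and the hyperparameter values are all made up for this example.

```python
import random


def mse_gradient(w, points):
    """Gradient of mean squared error for the model y = w * x over the given points."""
    return sum(2 * (w * x - y) * x for x, y in points) / len(points)


def train(points, steps, learning_rate, batch_size=None, seed=0):
    """Fit w by repeated gradient steps; batch_size=None means use all points."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(steps):
        # Gradient descent uses every point; SGD uses a random mini-batch.
        batch = points if batch_size is None else rng.sample(points, batch_size)
        w -= learning_rate * mse_gradient(w, batch)
    return w


# Made-up, noise-free data generated from y = 2x.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]

w_full = train(data, steps=200, learning_rate=0.01)               # gradient descent
w_sgd = train(data, steps=200, learning_rate=0.01, batch_size=2)  # stochastic version
```

Both runs should end up close to `w = 2`. The stochastic version takes a noisier path because each step only sees part of the data, but each step is cheaper to compute.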

Andrej Karpathy

The spelled-out intro to neural networks and backpropagation: building micrograd

- Micrograd is a scalar-valued autograd engine (a scalar is a single numerical value indicating the magnitude of something)

- Autograd engine - automatic gradient - implements backpropagation

- Backpropagation lets you efficiently evaluate the gradient of a loss function with respect to the weights of a neural network

- That lets us iteratively tune the weights to minimize the loss function

- The mathematical core of any deep learning neural network

- Start at the end and recursively apply the chain rule from calculus

- By calculating the derivative of the final output with respect to every node in a mathematical expression, it tells you how much the final output will change if you slightly nudge a node's value (for example, a weight).

- Neural networks are just mathematical expressions

- Neural networks happen to be a certain class of mathematical expression

- Backpropagation is much more general. It doesn't care about neural networks
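The points above can be sketched as a tiny scalar autograd engine. This is a simplified illustration in the spirit of micrograd, not its actual code: the `Value` class and its attribute names are assumptions made for this example, and it only supports addition and multiplication. Each operation records its local derivative, and `backward()` starts at the final output and applies the chain rule backwards through the expression.

```python
class Value:
    """A scalar that remembers how it was computed (illustrative, micrograd-style)."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0                # d(output)/d(self), filled in by backward()
        self._parents = parents
        self._backward = lambda: None  # leaf nodes have nothing to propagate

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))

        def _backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))

        def _backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def backward(self):
        # Order the graph so each node comes after everything it depends on.
        order, seen = [], set()

        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0  # d(output)/d(output)
        # Walk from the output back to the inputs, applying the chain rule.
        for node in reversed(order):
            node._backward()


# A small expression: L = a * b + c
a, b, c = Value(2.0), Value(-3.0), Value(10.0)
L = a * b + c
L.backward()
```

After `L.backward()`, `a.grad` is `-3.0`, `b.grad` is `2.0`, and `c.grad` is `1.0`. In other words, nudging `a` up by a small amount `h` changes `L` by about `-3 * h`. Nothing here is specific to neural networks: the same mechanism works for any expression built from these operations.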

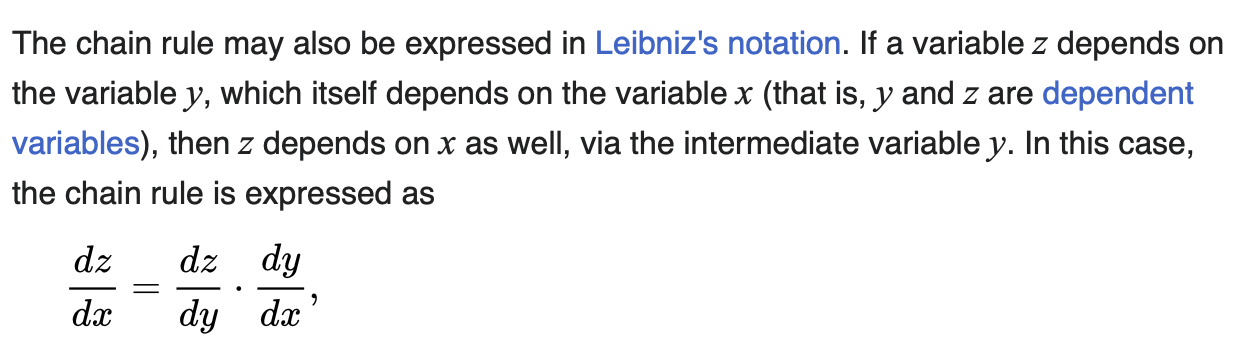

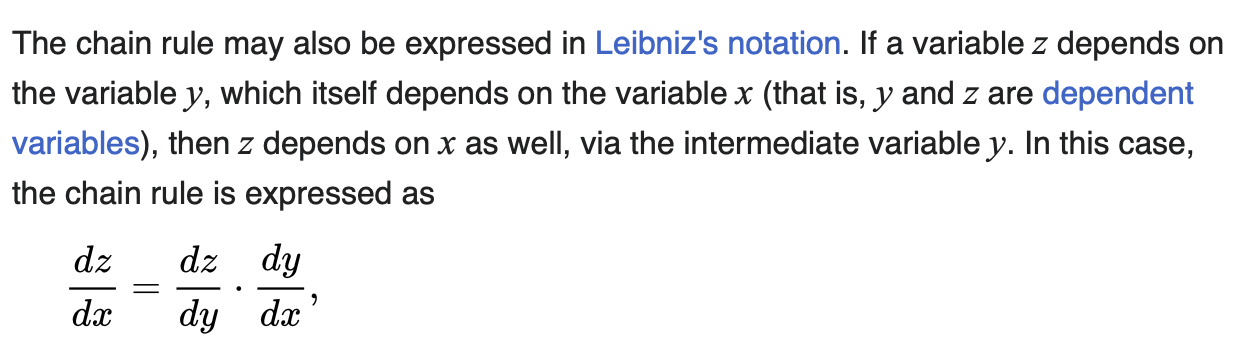

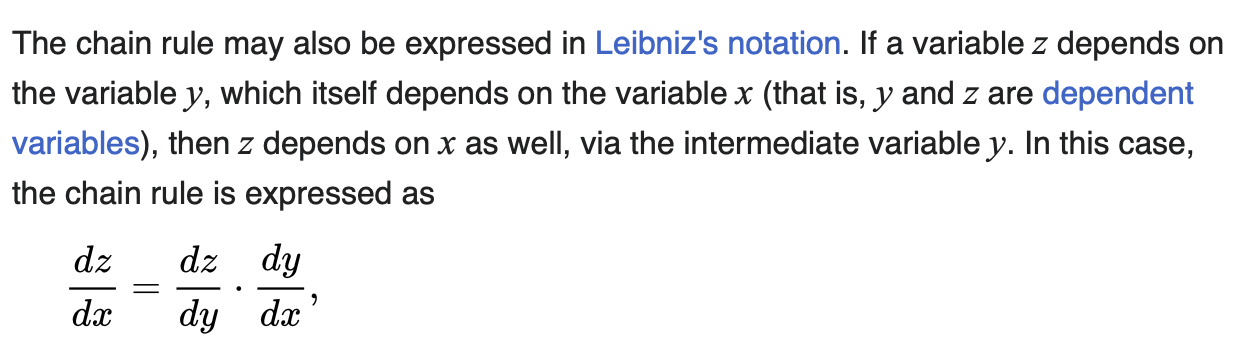

- Chain rule

- Notes stop at 56:19

Which weights are adjusted?

All.

Won't this introduce regressions in neural circuits that already have a low loss?

Yes, it will. Training makes the network gradually better at everything in the training set rather than drastically better at one thing quickly.

Learning Rate - The actual size of each weight update is scaled by a learning rate hyperparameter.

This controls how large each adjustment step should be.

Importantly, backpropagation doesn't selectively choose individual weights to update. Instead, it calculates updates for all weights simultaneously. Each weight is adjusted proportionally to its contribution to the error.
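A minimal sketch of that update, assuming plain gradient descent with no momentum or other optimizer tricks: every weight moves against its own gradient, scaled by the learning rate. The function name `gradient_step` and the numbers are made up for illustration, and the gradients are assumed to have already been computed by backpropagation.

```python
def gradient_step(weights, grads, learning_rate=0.01):
    """Return new weights after one update: w_new = w - learning_rate * dLoss/dw."""
    return [w - learning_rate * g for w, g in zip(weights, grads)]


weights = [0.5, -1.0, 2.0]
grads = [4.0, 0.0, -2.0]  # hypothetical gradients of the loss for each weight

new_weights = gradient_step(weights, grads, learning_rate=0.1)
```

All three weights go through the same update at once. The first moves the most because its gradient is largest, the second stays where it is because its gradient is zero, and the third moves in the opposite direction because its gradient is negative.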

3Blue1Brown, Part 2 - heavier math

Backpropagation calculus | Chapter 4, Deep learning

Created: September 17 2024.

Modified: September 25 2024.